What if we could use advanced AI to drastically improve our ability to model and control everything from the electricity grid to our immune systems?

Defined by our Programme Directors (PDs), opportunity spaces are areas we believe are likely to yield breakthroughs.

In Mathematics for Safe AI, we are exploring how to leverage mathematics and scientific modelling to advance transformative AI and provide a basis for provable safety.

Beliefs

The core beliefs that underpin this opportunity space:

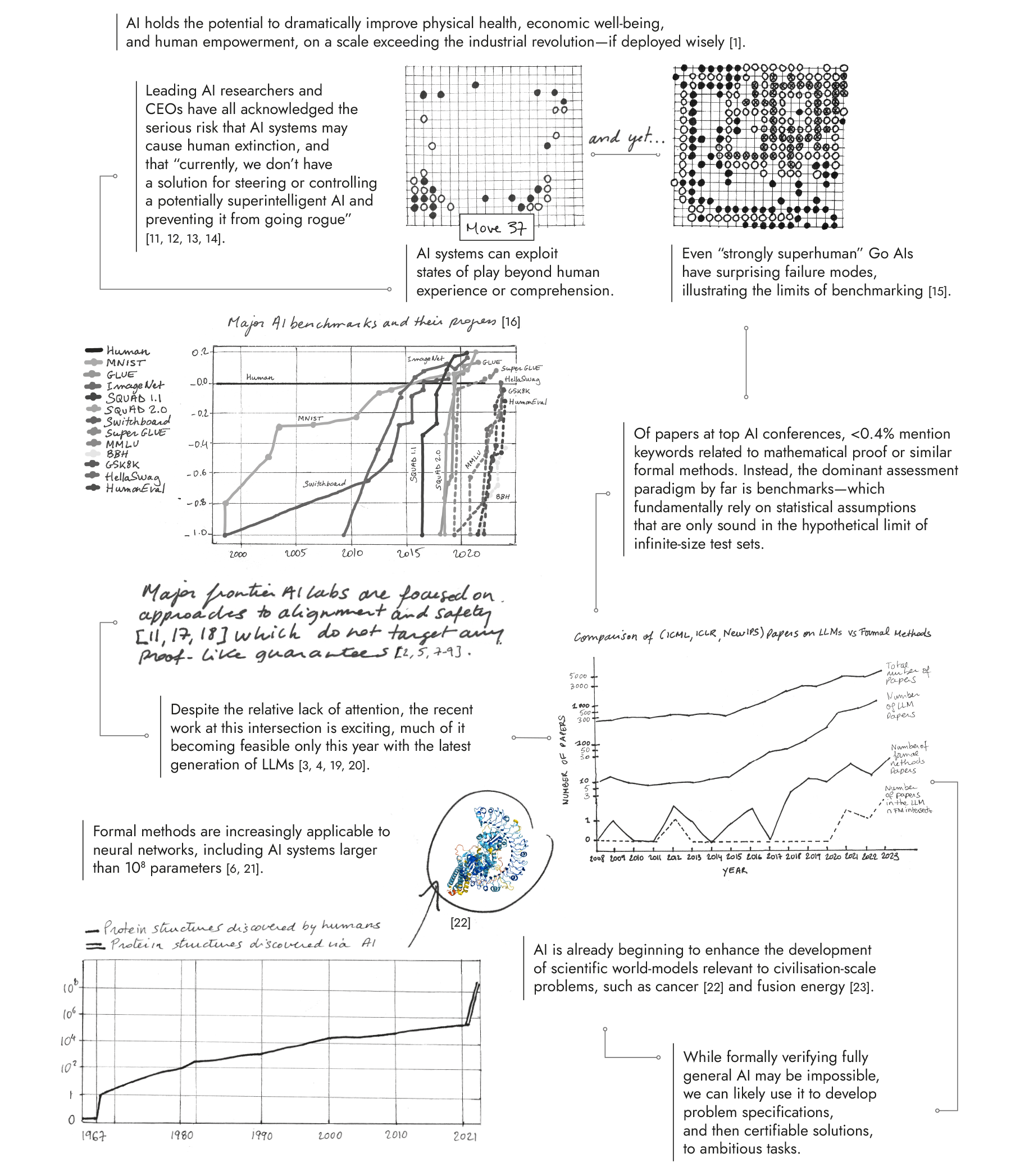

Future AI systems will be powerful enough to transformatively enhance or threaten human civilisation at a global scale —> we need as-yet-unproven technologies to certify that cyber-physical AI systems will deliver intended benefits while avoiding harms.

Given the potential of AI systems to anticipate and exploit world-states beyond human experience or comprehension, traditional methods of empirical testing will be insufficiently reliable for certification —> mathematical proof offers a critical but underexplored foundation for robust verification of AI.

It will eventually be possible to build mathematically robust, human-auditable models that comprehensively capture the physical phenomena and social affordances that underpin human flourishing —> we should begin developing such world models today to advance transformative AI and provide a basis for provable safety

Programme: Safeguarded AI

To build a programme within an opportunity space, our Programme Directors direct the review, selection, and funding of a portfolio of projects.

Backed by £59m, this programme looks to combine scientific world models and mathematical proofs ARIA is looking to construct a ‘gatekeeper’ – an AI system designed to understand and reduce the risks of other AI agents. If successful, we’ll unlock the full economic and social benefits of advanced AI systems while minimising risks.

Opportunity seeds

Outside the scope of programmes and with budgets of up to £500k, these opportunity seeds support ambitious research aligned to the Mathematics for Safe AI opportunity space.

We’re funding 15 opportunity seeds exploring different mathematical approaches to help us verify, understand, and control AI systems.

Formalising and Mitigating the ‘Eliciting Latent Knowledge’ Problem

Francis Rhys Ward, Dr Francis Rhys Ward's Research Group

End-to-End Verification for Constraint Programming

Ciaran McCreesh

SFBench

Jason Gross, Theorem + Redwood Research

Hardware-Level AI Safety Verification

Edoardo Manino, University of Manchester; Mirco Giacobbe, University of Birmingham

Dovetail Research

Alfred Harwood, Dovetail Research

FV-Spec: A Large-Scale Benchmark For Formal Verification of Software

Mike Dodds + Ledah Casburn, Galois

Human Inductive Bias Project

Chris Pang, Meridian

Investigate the feasibility of using LLMs to scalably produce Large Logic-based Expert Systems (LLES)

Joar Skalse

Singular Learning Theory for Safe AI Agents

Daniel Murfet, Timaeus

Learning-theoretic AI Alignment Research Agenda

Vanessa Kosoy, CORAL

Combining Physical and Intentional Stance for Safe AI

Martin Biehl, Cross Labs, Cross Compass; Manuel Baltieri, Araya Inc.; Nathaniel Virgo, University of Hertfordshire

SafePlanBench & Logically Constrained Reinforcement Learning

Agustín Martinez Suñé, University of Oxford

GFlowNet-Steered Probabilistic Program Synthesis for Safer AI

Sam Staton, Nikolay Malkin + Younesse Kaddar, University of Oxford

SCRY-AI: Self-operating Calculations of Risk for Yielding Accurate Insights

Vehbi Deger Turan, Metaculus

Extraction of Structured Knowledge from Language for Scientific Discovery

Nikolay Malkin + Henry Gouk, University of Edinburgh

This opportunity space is part of our rolling seed call experiment – see what's in scope for opportunity seeds in this space by reading the original call for proposals and apply at the link below.

Sign up for updates

Stay up-to-date on our opportunity spaces and programmes, be the first to know about our funding calls and get the latest news from ARIA.