Mathematics for Safe AI

We don’t yet have technical solutions that can ensure powerful AI systems interact as intended with real-world systems and populations. A combination of scientific world models and mathematical proofs may be the answer to ensuring AI provides transformational benefit without harm.

What is an opportunity space?

Opportunity spaces are areas of research that we believe are ripe for breakthroughs. They are defined by our Programme Directors, and each must be highly consequential for society, under-explored relative to its potential impact, and ripe for new talent, perspectives, or resources to change what’s possible.

Core beliefs

The core beliefs that underpin this opportunity space:

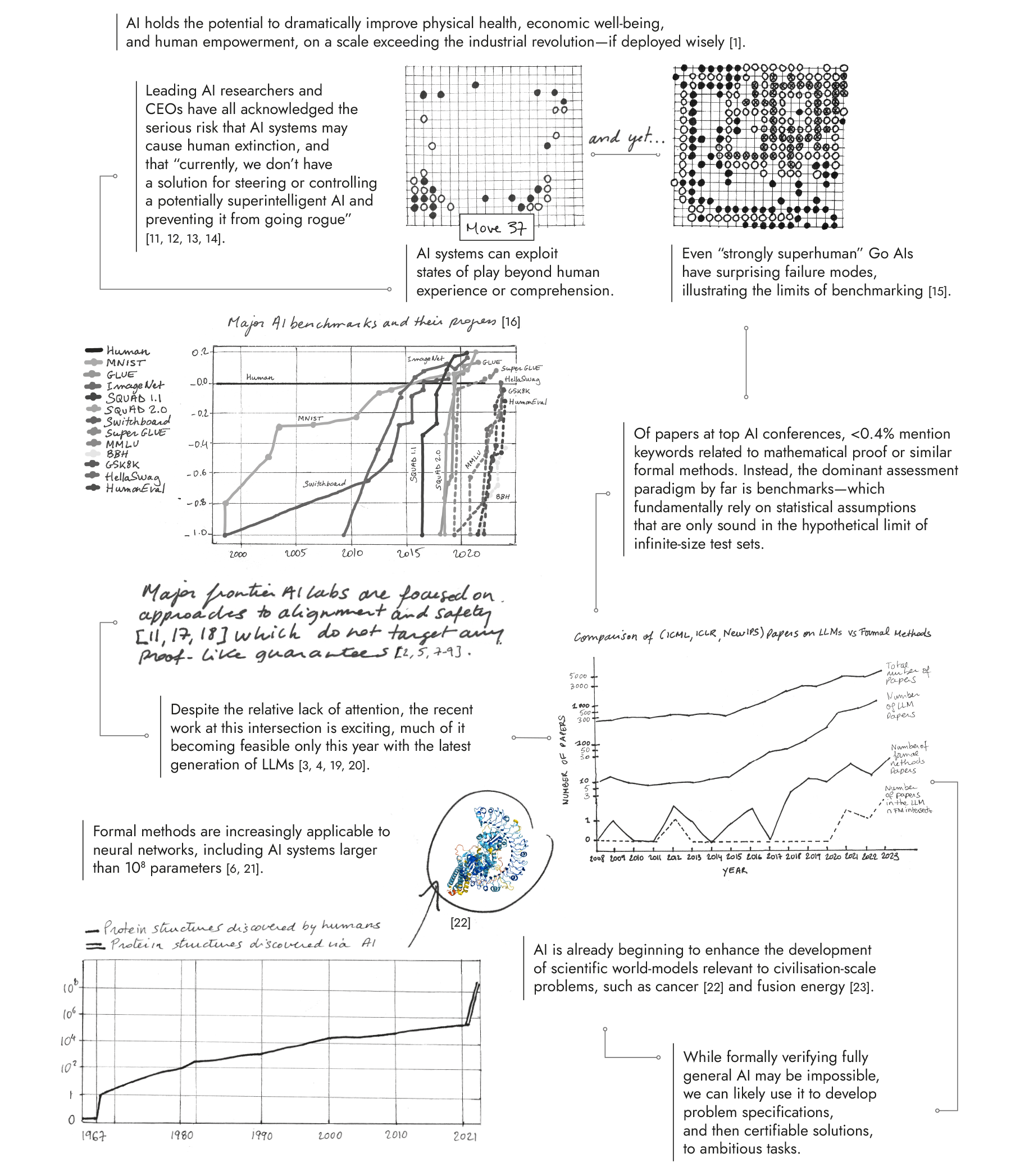

Future AI systems will be powerful enough to transformatively enhance or threaten human civilisation at a global scale → we need as-yet-unproven technologies to certify that cyber-physical AI systems will deliver intended benefits while avoiding harms.

Given the potential of AI systems to anticipate and exploit world-states beyond human experience or comprehension, traditional methods of empirical testing will be insufficiently reliable for certification → mathematical proof offers a critical but underexplored foundation for robust verification of AI.

It will eventually be possible to build mathematically robust, human-auditable models that comprehensively capture the physical phenomena and social affordances that underpin human flourishing → we should begin developing such world models today to advance transformative AI and provide a basis for provable safety.

Observations

Some signposts as to why we see this area as important, underserved, and ripe.

Programme spotlight: Safeguarded AI

As AI becomes more capable, it has the potential to power scientific breakthroughs, enhance global prosperity, and safeguard us from disasters. But only if it’s deployed wisely.

Current techniques for mitigating the risks of advanced AI systems have serious limitations: they can’t be relied upon in practice to ensure reliability and safety.

Backed by £59m, this programme aims to combine scientific world models and mathematical proofs to construct a ‘gatekeeper’: an AI system designed to understand and reduce the risks of other AI agents. If successful, we’ll unlock the full economic and social benefits of advanced AI systems while minimising risks.

Meet the programme team

Our Programme Directors are supported by a Programme Specialist (P-Spec) and Technical Specialist (T-Spec); this is the nucleus of each programme team. P-Specs co-ordinate and oversee the project management of their respective programmes, whilst T-Specs provide highly specialised and targeted technical expertise to support programmatic rigour.

David 'davidad' Dalrymple

Programme Director

davidad is a software engineer with a multidisciplinary scientific background. He’s spent five years formulating a vision for how mathematical approaches could guarantee reliable and trustworthy AI. Before joining ARIA, davidad co-invented the top-40 cryptocurrency Filecoin and worked as a Senior Software Engineer at Twitter.

Yasir Bakki

Programme Specialist

Yasir is an experienced programme manager whose background spans the aviation, tech, emergency services, and defence sectors. Before joining ARIA, he led transformation efforts at Babcock for the London Fire Brigade’s fleet and a global implementation programme at a tech start-up. He supports ARIA as an Operating Partner from Pace.

Nora Ammann

Technical Specialist

Nora is an interdisciplinary researcher with expertise in complex systems, philosophy of science, political theory and AI. She focuses on the development of transformative AI and understanding intelligent behaviour in natural, social, or artificial systems. Before ARIA, she co-founded and led PIBBSS, a research initiative exploring interdisciplinary approaches to AI risk, governance and safety.

Explore our other opportunity spaces

Our opportunity spaces are designed as an open invitation for researchers from across disciplines and institutions to learn with us and contribute – a variety of perspectives are just what we need to change what’s possible.